Photo by Markus Winkler on Unsplash

Where the hell is my data?

Understanding registers, cache, memory and more


Imagine that it’s the night before your exams. You were partying hard the entire semester. And now you realize that you could seriously fail your Operating systems exam tomorrow. Oops. You dash to the college library.

Your phone is distracting, so it’s best if you keep it at home. You go and look for all the operating systems books that you can find. There are tens of them, so you take a couple of books and bring them to your table. You open the book with the dinosaur on the cover because it looks the least intimidating. Time to start reading.

You’re reading the book line by line, and you encounter a word you don’t understand. What in the world is a Nutex? You’re a smart kid, so you realize the author must’ve added the context nearby. So naturally, you look around the page for more information. But you can’t find any. So you riffle through the pages and search for the context in the chapter. Maybe the chapter summary has something?

Dang it. You can’t find any. You skim through the book trying to find what it means, but to no avail. You’re frustrated at this point, but you’re adamant. You open another OS book. This one has a circus on the front cover. Still nothing. What in the world? This is such a time sink. You take a deep breath. You’ve got an important exam tomorrow. You’ve done nothing up to this point. It’s best if we leave this for now. We can always get back to it later. So we’re going to leave this, right?

You go to the shelves and pick up books and skim through them one by one. You’ve got a million books sprawled on the floor like a crazy professor in a movie. The librarian is horrified when she sees you and asks you to explain yourself. You tell her about your problem, and how you can’t really Google stuff because you left your phone in your room. She points to the library computer.

Of course! How did you forget about the library computer? Well, this is your first time here because you were out partying all semester, but whatever. You scramble across the room and type “nutex in operating systems” into the Google search bar. Google only returns:

Congratulations. You just wasted an hour on a spelling mistake.

Now, I realize this story was a bit far-fetched, but I need to make a point here. Did you notice the structure you used to find information you didn’t have? First you looked for information on the same page, then in the same chapter, the same book, a different book, and finally on the library shelves. It’s just more efficient that way. You first look for information where you’re likely to find it in a minimal amount of time. If you don’t find it there, you look for it somewhere else. This is an important concept that is also used by computers. Let us see how.

Registers

A register is the fastest and smallest storage medium on the menu today. Remember how you were reading that operating systems book line by line? Similarly, a processor executes the instructions in a program one by one. An instruction could be, say, adding two numbers, or fetching data from RAM (more on that later).

You can think of the registers as the place where the CPU stores the data it is working on right now. While reading the book, you have to focus on two or three concepts at a time. You need to focus to keep them in your head. And on turning the page, you’re going to have to focus on new concepts, and keep those new concepts in your head instead. Similarly, a CPU keeps the data it is currently working on in its registers. In modern computer systems, register values can change billions of times in a second.

Cache

Have you ever learnt a concept at night, felt like you understood it, and completely forgot it in the morning? It’s the same way with registers (except, it’s by design). They’re fast, but they can’t hold much data. This is where a cache comes in.

See, when you read about a “nutex” and realised that you didn’t know what it meant, you looked for the data on the same page. When you couldn’t find anything there, you searched for it in the chapter you were reading. When even that didn’t work out, you looked for the word in the entire book. This is, in essence, what the L1, L2, and L3 caches are in a processor.

The L1 cache is the smallest, but also the fastest, of the three caches. The processor needs data to work with; say, it needs to know which two numbers you want it to add. If the data is not present in one of its registers (registers are small), then it looks for it in the L1 cache.

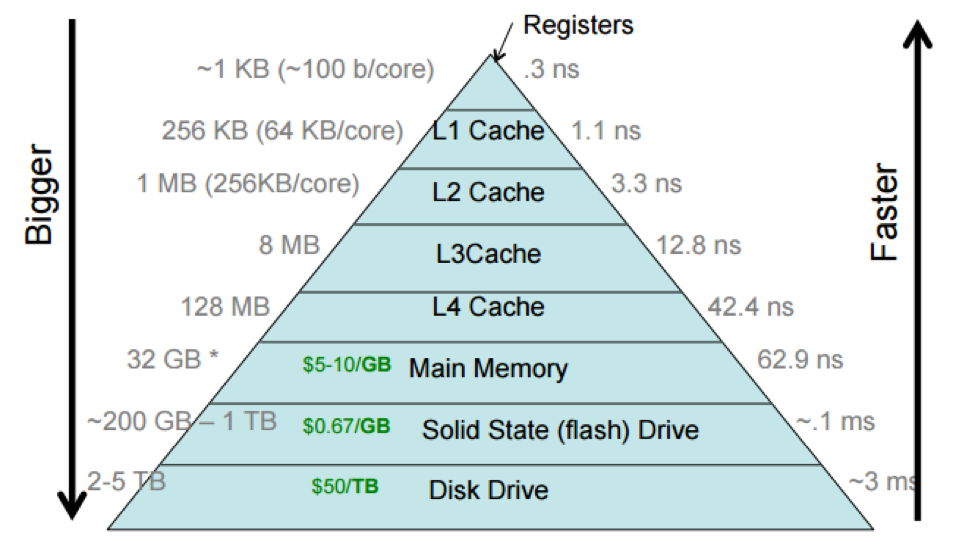

If the data is not found in the L1 cache, the processor looks for it in the larger, but slower, L2 cache. If it’s not found there either, it’s looked for in the even larger, but even slower, L3 cache. Generally, the L1 cache is measured in kilobytes, the L2 in hundreds of kilobytes to a few megabytes, and the L3 in megabytes to tens (or even hundreds) of megabytes.
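The lookup order described above can be sketched as a toy model in Python. This is only an illustration: the level names come from the text, but the `LEVELS` list, the `find` function, and especially the cost numbers are assumptions made up for the example, not measurements of any real processor.

```python
# Toy model of a memory hierarchy lookup.
# The costs are illustrative assumptions, not real latencies.
LEVELS = [
    ("L1 cache", 1),
    ("L2 cache", 4),
    ("L3 cache", 20),
    ("RAM", 100),
]


def find(address, contents):
    """Search each level in order, fastest first.

    `contents` maps a level name to the set of addresses it holds.
    Returns (level_name, total_cost), where total_cost adds up the cost
    of every level checked along the way. level_name is None on a miss.
    """
    total_cost = 0
    for name, cost in LEVELS:
        total_cost += cost
        if address in contents.get(name, set()):
            return name, total_cost
    return None, total_cost


# Each larger level also holds everything the smaller ones hold.
contents = {
    "L1 cache": {0x10},
    "L2 cache": {0x10, 0x20},
    "L3 cache": {0x10, 0x20, 0x30},
    "RAM": {0x10, 0x20, 0x30, 0x40},
}

print(find(0x10, contents))  # found right away in L1
print(find(0x40, contents))  # falls all the way through to RAM
```

Notice that a miss is not free: the further down the data lives, the more levels get checked first, which is why keeping frequently used data in the small, fast levels pays off.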

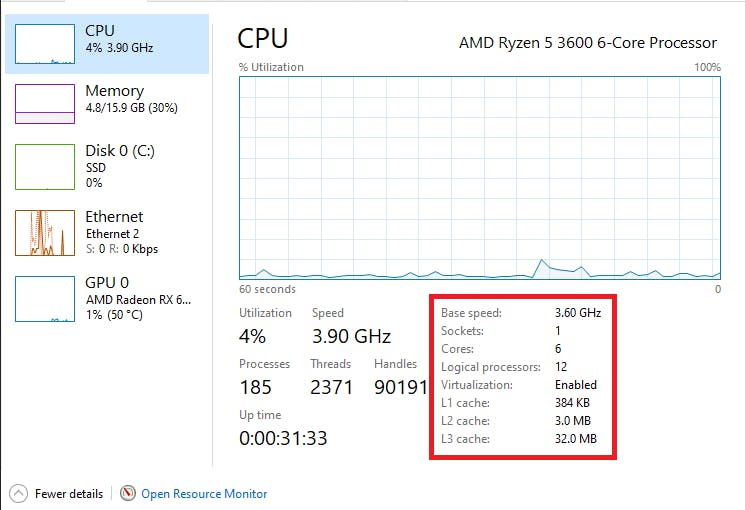

Modern processors are made up of many ‘cores’. The device you’re reading this on has at least two of these ‘cores’. A core is a processing unit of the CPU. You can think of a core as a worker in a factory. The CPU is a team of workers (all the cores) plus a supervisor (mechanisms for smooth functioning of the cores).

In modern systems, each core in a CPU gets its own L1 and L2 cache, though on slightly older systems the L2 cache might be shared by a couple of cores. The L3 cache is typically shared by all the cores of a CPU.

You can actually see the number of cores and the amount of cache memory on your system. On Windows:

Open Task Manager → More details → Performance tab
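If you would rather check from code, here is a minimal Python sketch using the standard library. Keep in mind that `os.cpu_count()` reports logical CPUs, which can be higher than the number of physical cores on processors that run more than one thread per core, and it does not report cache sizes at all.

```python
import os

# Number of logical CPUs visible to the operating system.
# This may be None if Python cannot determine it.
logical_cpus = os.cpu_count()
print(f"Logical CPUs: {logical_cpus}")
```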

Memory

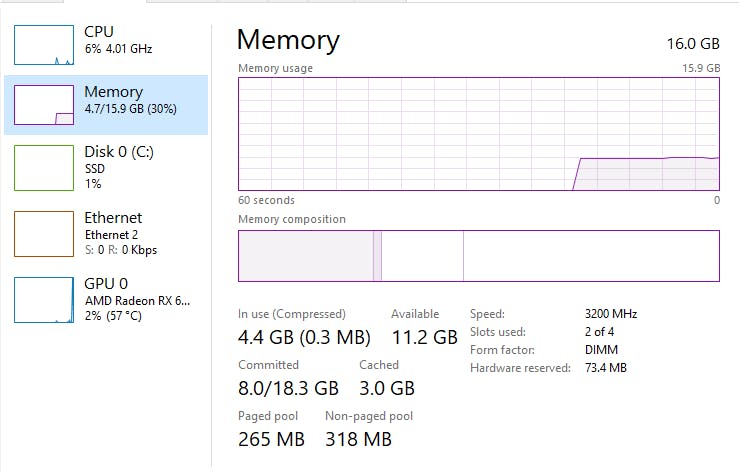

Registers and cache are part of a CPU. But another part of a computer’s memory organization is Random Access Memory (RAM), or just memory. As we learnt, registers can’t hold much data. So the bulk of the data on which the CPU works is stored in the memory.

In our example, you can think of memory as the table on which you were studying. All the books you were reading were stored on this table. You can switch between books because they are close to you, and are easily accessible to you. Similarly, all the processes running on the system are stored in memory.

Programs, the data they work on, and the results they generate are all stored in memory. In modern systems, memory is usually on the order of gigabytes, since these systems run lots of processes. A process is a program under execution. Look at the screenshot above. There are 185 processes running simultaneously. They take up 5.8 GB of RAM. In fact, the operating system is also running in RAM.

Memory is volatile in nature, that is, RAM requires power to retain its contents. When there is no power (say, your laptop runs out of charge), the contents are lost, and the RAM becomes a blank slate. You can think of it as clearing the table. When you leave the library, the librarian will pick up the books you left and put them back in their place. When you come back, you’ll find that your books are not on the table.

This creates a problem. How do we store data long term, even if there’s no power present? This is where non-volatile memory comes into the picture.

Secondary storage

When you couldn’t find any information in the books on your table, you searched the rest of your library. Even if your table got cleared, the books on it will go into the library shelves. Similarly, when the data in memory is not enough, or memory is cleared (power goes away), the computer uses secondary storage.

This is the bulk of the storage on a personal computer. It is generally in the form of a hard disk drive (HDD) or a solid state drive (SSD).

This is non-volatile storage, i.e., even without power, the data stored on a secondary storage device persists. This is where your images, videos, games, and any other data are stored. Secondary storage can range from hundreds of gigabytes to petabytes. Based on this knowledge, we can see how data is organized, and how it is loaded into memory.

The boot process

When you press the power button on your PC, the CPU starts executing the motherboard firmware, traditionally called the BIOS (Basic Input/Output System). The firmware first runs a power-on self-test (POST), which checks the components of the system for hardware errors. If it doesn’t find any, the firmware reads the Master Boot Record (MBR) from the boot disk.

The MBR is the first sector of the disk, and it contains the bootloader code. The bootloader code is loaded into memory and executed. The bootloader loads the kernel into memory and passes control to it. The kernel then loads any device drivers, sets up the file system, and starts the essential system processes. Finally, the kernel launches the user-mode environment, which includes the login screen and the desktop. Any user process runs using the CPU’s registers, and data that the CPU is expected to need soon is cached in the L1, L2, and L3 caches.
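To make the idea of “the first sector of the disk” a little more concrete, here is a small Python sketch. A classic MBR is 512 bytes long and ends with the two-byte boot signature `0x55 0xAA`, which the firmware checks before running the bootloader code. The function name `looks_like_mbr` and the fake sector are made up for this illustration; the sketch works on bytes in memory and does not read a real disk.

```python
SECTOR_SIZE = 512             # size of a classic MBR sector in bytes
BOOT_SIGNATURE = b"\x55\xaa"  # last two bytes of a valid MBR


def looks_like_mbr(sector: bytes) -> bool:
    """Return True if the sector is 512 bytes and ends with the boot signature."""
    return len(sector) == SECTOR_SIZE and sector[510:512] == BOOT_SIGNATURE


# A fake sector for demonstration: 510 zero bytes followed by the signature.
fake_sector = bytes(510) + BOOT_SIGNATURE
blank_sector = bytes(SECTOR_SIZE)

print(looks_like_mbr(fake_sector))   # True
print(looks_like_mbr(blank_sector))  # False
```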

While this data might be a bit old, this picture will give you a good idea of the access time of a register (0.3 nanoseconds) versus a disk drive (3 milliseconds!). In other words, a register access is about 10 million times faster than a disk access. On the other hand, all the registers on a CPU together can hold up to about 1 KB of data (newer numbers will vary). A disk drive can hold 5 terabytes (the latest ones can hold up to 22 TB). That means a disk drive can hold about 5 billion times the data in all the registers on a CPU.
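If you want to check the arithmetic yourself, here is a quick Python sketch. It uses only the figures quoted above and assumes decimal units (1 KB = 1,000 bytes, 1 TB = 10^12 bytes); the variable names are just for illustration.

```python
# Access times quoted in the text, converted to seconds.
register_access_s = 0.3e-9  # 0.3 nanoseconds
disk_access_s = 3e-3        # 3 milliseconds

# Capacities quoted in the text, in bytes (decimal units assumed).
register_bytes = 1_000      # about 1 KB for all registers together
disk_bytes = 5 * 10**12     # 5 TB

speed_ratio = disk_access_s / register_access_s
size_ratio = disk_bytes // register_bytes

print(f"A register is about {speed_ratio:,.0f}x faster than the disk")
print(f"The disk holds about {size_ratio:,}x more data than the registers")
```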

Winding up

That’s it for today, folks! Hope you liked today’s blog. Here are some sources I used and further reading on caching, cores-vs-cpu, storage, and speed-vs-size tradeoff.

Today we learnt about memory organization, and the speed-size tradeoff as we get further down the memory pyramid. We learnt about registers, caching, volatility, memory, storage, and more. Have a great day!